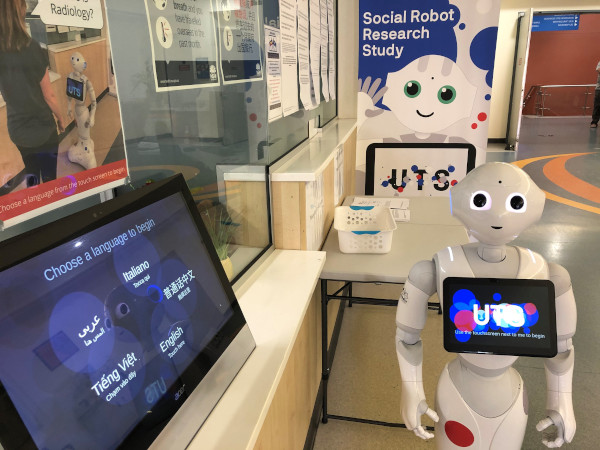

We recently conducted a research study with social robots at Fairfield Hospital in Sydney.

We designed the experience and then implemented the intelligent distributed system behind it, which combines a SoftBank Pepper robot, a touch-screen kiosk and several edge computing devices.

The robots alleviate stress and contribute to the welcoming atmosphere at the hospital.

From a technology perspective, the system included a number of novel features:

- Multi-lingual speech recognition, speech production and natural language processing

- Seamless multi-modal interaction using speech, gestures, video and touch (users can engage with the robot, the robot's touch screen and/or the attached touch-screen kiosk)

- An intelligent "user engagement" subsystem that detects approaching people, maintains eye contact and responds through gestures

- A distributed system that offloads speech processing and image processing to nearby edge devices equipped with GPUs

- A visually appealing "organic" graphical interface that updates in real time (e.g., as partial speech recognition is performed)

- A web-based management interface that enables user-experience designers to update system behaviors while the system is running (i.e., without restarts/reboots and without writing code)
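
To give a feel for how behaviors can change while a system keeps running, here is a minimal Python sketch. It is not the code used in the study: `BehaviorStore`, `update_from_json`, `respond` and the example intents are all hypothetical names chosen for illustration. The idea is that behaviors live in a data table rather than in code, and a management interface could replace that table at runtime.

```python
import json
import threading


class BehaviorStore:
    """Hypothetical store for a table of intent -> response behaviors."""

    def __init__(self, initial):
        self._lock = threading.Lock()
        self._behaviors = dict(initial)

    def update_from_json(self, payload):
        # Parse and validate first, so a bad payload never replaces a good table.
        new_table = json.loads(payload)
        if not isinstance(new_table, dict):
            raise ValueError("behavior table must be a JSON object")
        # Swap the whole table in one step; readers see the old or the new one.
        with self._lock:
            self._behaviors = new_table

    def respond(self, intent):
        # Take a reference under the lock, then look up outside it.
        with self._lock:
            table = self._behaviors
        return table.get(intent, table.get("fallback", ""))


# Assumed example data: a designer edits the greeting while the system runs.
store = BehaviorStore({"greet": "Hello!", "fallback": "Sorry, could you repeat that?"})
before = store.respond("greet")
store.update_from_json('{"greet": "Welcome to the hospital!", "fallback": "Sorry?"}')
after = store.respond("greet")
```

Because the table is plain data, a designer could edit it through a form and the change would apply to the next interaction, with no restart and no code changes.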

IT News wrote a good article about the study.